| Effective Date: | 19/12/2024 | Review Date: | As Required |
|---|---|---|---|
| Document Status: | PUBLISHED | Original Version: | 2024.1 |
| Consultation Period Opened: | 13/12/2024 | Consultation Period Closed: | 16/12/2024 |
| Authorised by: | Chief Executive Officer | Document Owner: | Alexander Mueller |

WARNING! – UNCONTROLLED WHEN PRINTED! – THE ORIGINAL/LATEST/CURRENT VERSION OF THIS DOCUMENT IS KEPT IN THE I.T.S POLICY AND PROCEDURES MANUAL LOCATED IN THE STAFF PORTAL.

01. Objective

This document provides assessors with clear guidelines for identifying AI-generated responses and outlines the procedures for confirming that student submissions are authentic. Maintaining academic integrity ensures that assessments accurately reflect a student’s knowledge and capabilities. Undetected AI-generated submissions may increase the prevalence of academic cheating and damage the reputation of the VET sector domestically and internationally.

02. Identifying AI-Generated Responses

Assessors should consider the following characteristics as potential indicators of AI-generated responses:

| # | Reason | Weight Points | Description |
|---|---|---|---|
| 1. | Overly Structured Responses | 6 | Content is well-organised into sections with headings and subheadings, even when the question does not require such formatting. The response feels generic, lacking specific details, examples, or a personal perspective. |
| 2. | Generalised Language | 2 | The answer includes broad, well-rounded explanations without referencing course materials, personal experiences, or specific examples. The language appears overly formal or polished and does not match the student’s typical writing style or their LLN results. |
| 3. | Repetition | 2 | |
| 4. | Lack of Personal Voice | 2 | The response lacks the student’s unique perspective, examples, or application of knowledge. Phrases such as “In conclusion” or “It is important to note” are used frequently and mechanically. |
| 5. | Absence of Errors | 2 | The response is free from spelling, grammar, or formatting errors, in stark contrast to the quality and style of the student’s previous submissions. NOTE: Our guidelines allow students to use software to assist with formatting and spell-checking their work, including re-wording their original responses for clarity. |
| 6. | Access to learning materials | 8 | The student’s access logs on the Learner Portal show that the student has not accessed the learning materials for the unit of competence related to this assessment, indicating a lack of engagement with the required learning resources and applied learning. |
| 7. | Lack of Referencing | 3 | The student’s response lacks references to the provided learning materials, course content, or any other credible sources. This omission raises concerns about whether the response reflects the student’s own understanding of, and engagement with, the required resources for the unit of competence. |
| 8. | Similarity to Online Content | 4 | |

Important Notice to Assessors:

Each criterion has been assigned a weighted score as part of our guidelines for identifying AI-generated responses. When a student’s response accumulates 10 weight points or more, it must be flagged as a potential AI-generated response.

At this stage, the assessor must proceed to Clause 03: Procedures to Confirm Authenticity to verify the authenticity of the student’s work. Please document all observations and evidence thoroughly to support your decision. Your diligence in maintaining the integrity of assessments is greatly appreciated.
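The flagging rule is simple arithmetic: add up the weight points of every criterion observed and flag the response when the total reaches 10. The following is a minimal Python sketch of that calculation, assuming the assessor records observed criteria by the names used in the Clause 02 table and that each criterion counts at most once (an assumption; the guideline does not say how repeated observations are handled). The names `CRITERIA_WEIGHTS`, `total_weight` and `is_flagged` are hypothetical and are not part of any ITS system.

```python
# Hypothetical lookup: weight points copied from the Clause 02 criteria table.
CRITERIA_WEIGHTS = {
    "Overly Structured Responses": 6,
    "Generalised Language": 2,
    "Repetition": 2,
    "Lack of Personal Voice": 2,
    "Absence of Errors": 2,
    "Access to learning materials": 8,
    "Lack of Referencing": 3,
    "Similarity to Online Content": 4,
}

# Threshold from the Important Notice: 10 or more points flags the response.
FLAG_THRESHOLD = 10


def total_weight(observed: list[str]) -> int:
    """Sum the weight points of each distinct criterion the assessor observed."""
    unknown = [name for name in observed if name not in CRITERIA_WEIGHTS]
    if unknown:
        raise ValueError(f"Unknown criteria: {unknown}")
    # Assumption: set() counts each criterion once, even if recorded twice.
    return sum(CRITERIA_WEIGHTS[name] for name in set(observed))


def is_flagged(observed: list[str]) -> bool:
    """Return True when the total meets or exceeds the flag threshold."""
    return total_weight(observed) >= FLAG_THRESHOLD


if __name__ == "__main__":
    observed = ["Overly Structured Responses", "Generalised Language", "Absence of Errors"]
    print(total_weight(observed), is_flagged(observed))  # 10 True
```

In this usage example the total is 6 + 2 + 2 = 10, which meets the threshold. By contrast, Overly Structured Responses plus Lack of Referencing totals 9 and would not be flagged on points alone.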

03. Procedures to Confirm Authenticity

If a response is suspected to be AI-generated, follow these steps:

Step 1) Document Observations

- Note the specific reasons for suspicion, referencing the characteristics listed in Clause 02: Identifying AI-Generated Responses.
- Compare the suspected response to the student’s previous work for inconsistencies in style, tone, or depth of understanding.

Step 2) Moderate the questions with another assessor

- Consult with another assessor within the department to moderate the assessment or review the flagged questions. If both assessors agree that the student’s response is likely AI-generated, proceed to Step 3. If they disagree, escalate the matter to the General Manager for further clarification and guidance.

Step 3) Request Additional Evidence

Contact the student and request supplementary evidence of their knowledge and skills. This may include:

- Handwritten responses
- Video/audio responses
- Live interview

Step 4) Cross-Verify Responses

- Compare the supplementary evidence with the original response for consistency.
- Look for alignment in content, tone, and depth of understanding.

Step 5) Record Findings

Step 6) Take Action

Based on findings:

04. Best Practices for Assessors

1. Set Expectations Early
2. Design Robust Assessments
3. Leverage Technology
   - Use plagiarism and AI-detection tools to flag suspicious submissions.
4. Continuous Professional Development

05. Examples

The following examples illustrate what Intelligent Training Solutions defines as appropriate and inappropriate use of AI technology in student work.


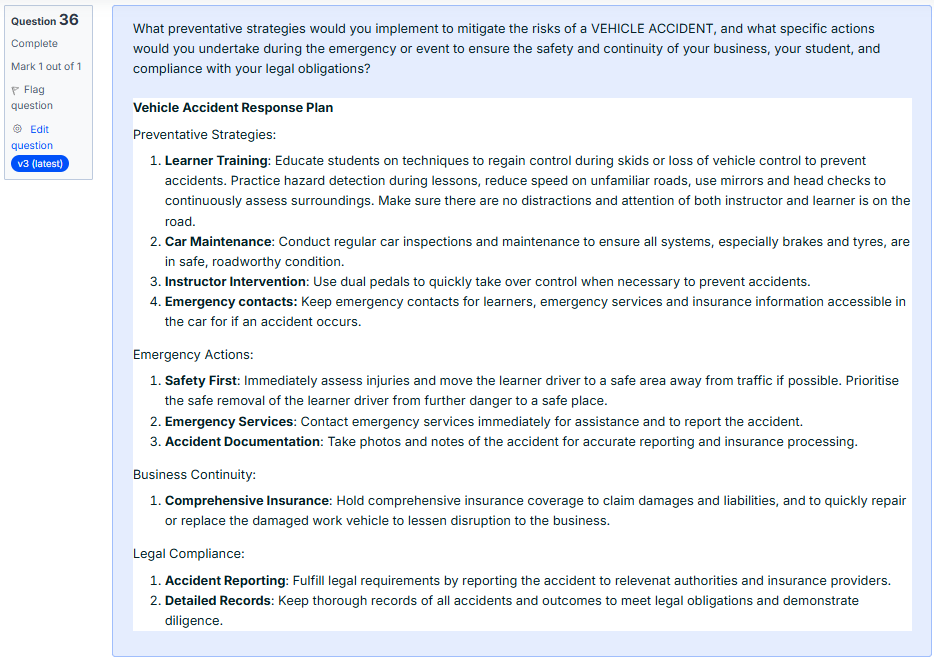

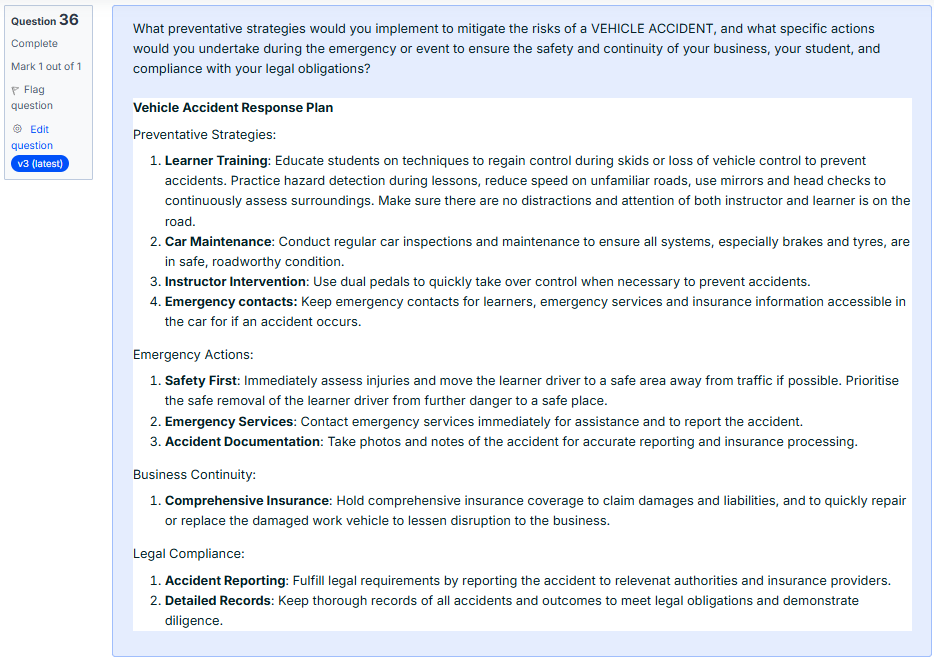

This response appears to be AI-generated based on the following observations:

Background information: This student was assessed at ACSF Level 3.

- The submission is excessively organised, with a formal structure that exceeds the requirements of the question.

- The response provides well-rounded but generalised explanations that lack specific references to course materials, personal experience, or detailed examples.

- The language and tone are overly formal and polished, which is inconsistent with what would be expected from a student with an ACSF Level 3 LLN score. Such responses are more typical of students with ACSF Level 5.

- The response is missing unique insights, examples, or personal perspectives that demonstrate the student’s own understanding and application of the topic.

- While an error-free submission alone does not indicate AI involvement, combined with the other factors, this further suggests an AI-generated response.

- When the same question is entered into AI software (e.g., ChatGPT), the resulting responses closely match the language, structure, and phrasing of the student’s submission.

Action Plan Summary:

- Inform the student that their response has been flagged as potentially AI-generated, providing specific reasons for the concerns.

- Emphasise the importance of authentic work and the RTO’s responsibility to verify submissions.

- Request supplementary evidence, such as handwritten responses, a video or audio explanation, or participation in a live interview (to be used as a last option only), to confirm the authenticity of the work.


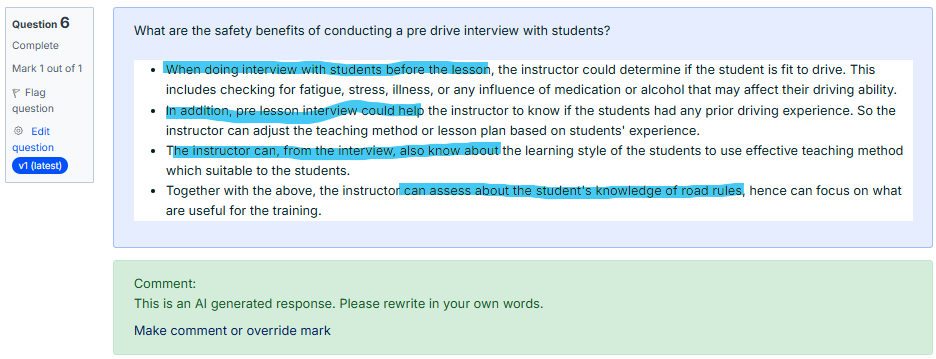

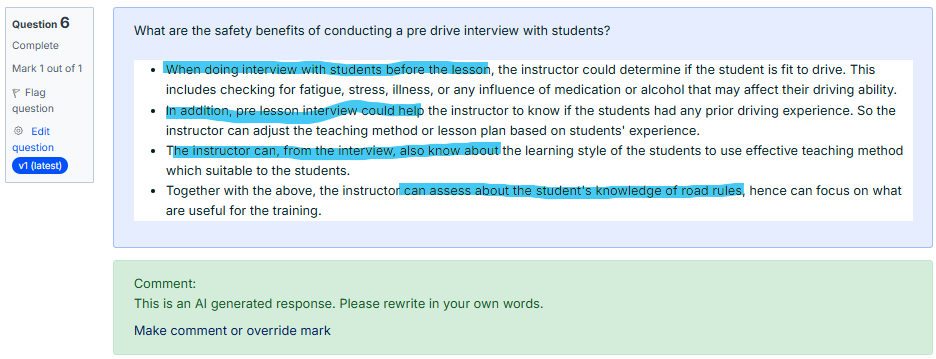

This response should not be considered AI-generated based on the following observations:

- The response draws on the learning materials: its dot points align closely with content covered in the presentations and webinars for this unit.

- The language used in the response, highlighted in blue, reflects the student’s verbal style. It lacks the polished grammar and phrasing typically associated with AI-generated content, such as that produced by ChatGPT.

- The response addresses the question directly and stays on topic without introducing extraneous detail. Its simple formatting also matches what is commonly seen in learner submissions made through the student portal.


06. Guideline Approval and Publication

This guideline was developed through a consultation process with a panel of six qualified assessors and has been approved for publication and use by the Chief Executive Officer, Alexander Mueller.

SIGNATURE | DATE: 16/12/2024

Revision History

POLICY DOCUMENTATION REGISTER

| REVISION | DATE | DOCUMENT VERSION | DESCRIPTION OF MODIFICATION |
|---|---|---|---|
| 1 | December 2024 | 2024.1 | Draft copy distributed for consultation and review. |
| 2 | 16/12/2024 | 2024.1 | Consultation completed. Recommendations included assigning weighted points to the criteria in Clause 02; a total of 10 or more points flags a response as potentially AI-generated, requiring the student to provide additional evidence to support their submission. Two additional items were added to Clause 02: 1) Referencing and 2) Learning Material Access Logs. The weighted points for each criterion were assigned by a panel of assessors (emailed 16/12/2024 @ 12:37 pm); outcomes are recorded on the Compliance & Management Register. |
| 3 | 19/12/2024 | 2024.1 | |